Today we are coming to you with a special edition of this newsletter, announcing a new tool for accessing and understanding U.S. state standardized testing data. The tool is called Zelma, and it’s an extension of the COVID-19 School Data Hub in partnership with Novy.ai.

Over the past several years, during and after the pandemic, our team has spent a lot of time working with state assessment data. State standardized testing in the U.S. is the most comprehensive view we have of student performance: it illustrates differences across schools, districts and demographic groups. The tests are taken by nearly all students every year, which makes the results a rich source of information on how our students are doing.

Unfortunately, the data are messy: formatted differently in every state, often difficult to access and hard to explore in depth.

So, to make the incredible amount of school testing data easier to access, I’d like to introduce Zelma. Zelma is an AI tool that allows anyone to use natural language to get insights from the data: charts, graphs, tables. Our goal is to make this data as transparent as possible, so we can make better decisions for our kids.

The tool can be accessed at Zelma.ai if you want to go right there to explore and experiment, check out our FAQs, and watch the Zelma intro video. If you want to go even deeper, we’ll be hosting a webinar about the tool at 1 p.m. ET/10 a.m. PT today. Register for the webinar here (we’ll send a recording after to everyone who registers).

Developing Zelma

During the pandemic, our team spent a lot of time on data from schools. This project began by studying COVID rates in schools, but in the summer of 2021 we pivoted to documenting and assembling a full database of reopening patterns over the 2020-2021 school year. In the end, we assembled the most comprehensive database on school reopening patterns during that year.

Over the past two years, we’ve turned our focus to the impacts of COVID on student academic performance. Although test performance is only one student outcome, it is an important one, and the COVID learning losses have been historic.

As we did this, it became clear to us that there was a need for better data accessibility, in particular for state assessment data. State standardized testing in the U.S. is the most comprehensive view we have of performance at the district and demographic group level. Because state tests are taken annually by nearly all students in grades 3-8, they are an incredibly rich source of information about how students are doing academically. The major downsides are that these tests are not comparable across states, the data can be difficult to access, and the files are often incomplete or use different formats from year to year.

We set out to create a user-friendly tool to provide a window into the extensive amount of state testing data in the U.S. Over the past year, our team at the COVID-19 School Data Hub — led by the extraordinary Clare Halloran — has cleaned and harmonized every state’s publicly available standardized testing data. For each state, we have standardized variable names across years and subjects, integrated all available subgroup information (e.g. data by race/ethnicity or economic status), and incorporated both state IDs and National Center for Education Statistics (NCES) IDs. So while we cannot make the tests comparable across states (the tests themselves are different), we can make each state’s data easier to work with over time. If you’re a researcher and you want to use these data, we’ve got you covered with clean, downloadable files for all states.
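To give a flavor of what "standardizing variable names" and adding NCES IDs can look like, here is a minimal sketch in Python. It is not our actual pipeline: the raw column names, the standard names, and the ID in the crosswalk are all hypothetical placeholders chosen for illustration.

```python
# Hypothetical raw column names mapped to one standard name each.
# Real state files use many other labels; these are illustrative only.
COLUMN_ALIASES = {
    "district_name": {"District", "DistrictName", "LEA Name"},
    "state_district_id": {"DistCode", "District Code", "LEA ID"},
    "pct_proficient": {"PctProf", "% Proficient", "Percent Meeting Standard"},
}


def harmonize_row(raw_row):
    """Rename known raw columns to standard names; keep unknown columns as-is."""
    lookup = {
        alias: standard
        for standard, aliases in COLUMN_ALIASES.items()
        for alias in aliases
    }
    return {lookup.get(name, name): value for name, value in raw_row.items()}


def attach_nces_id(row, crosswalk):
    """Add an NCES district ID looked up from the state ID (None if no match)."""
    out = dict(row)
    out["nces_district_id"] = crosswalk.get(row.get("state_district_id"))
    return out


# Two made-up rows for the same district, formatted differently in two years.
row_2019 = {"District": "Example District", "DistCode": "001", "PctProf": 41.2}
row_2023 = {"LEA Name": "Example District", "LEA ID": "001", "% Proficient": 38.5}
crosswalk = {"001": "0900001"}  # made-up state ID to NCES ID mapping

harmonized = [
    attach_nces_id(harmonize_row(row), crosswalk) for row in (row_2019, row_2023)
]
```

After this step both rows share the same column names and carry both IDs, which is what makes a state's data easier to follow from year to year.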

During this process, however, we realized that to make insights from these data truly accessible, we needed to do more than provide data files. We needed a way for everyone to be able to learn from the data themselves.

This is where Zelma.ai comes in. We partnered with an outstanding developer team at Novy.ai to build a ChatGPT-powered AI tool that can generate data insights. Zelma is designed to write code to query the database based on your questions. If you ask, for example, “What are the top 5 school districts in Connecticut in math in 2023?”, Zelma will return the graph below.

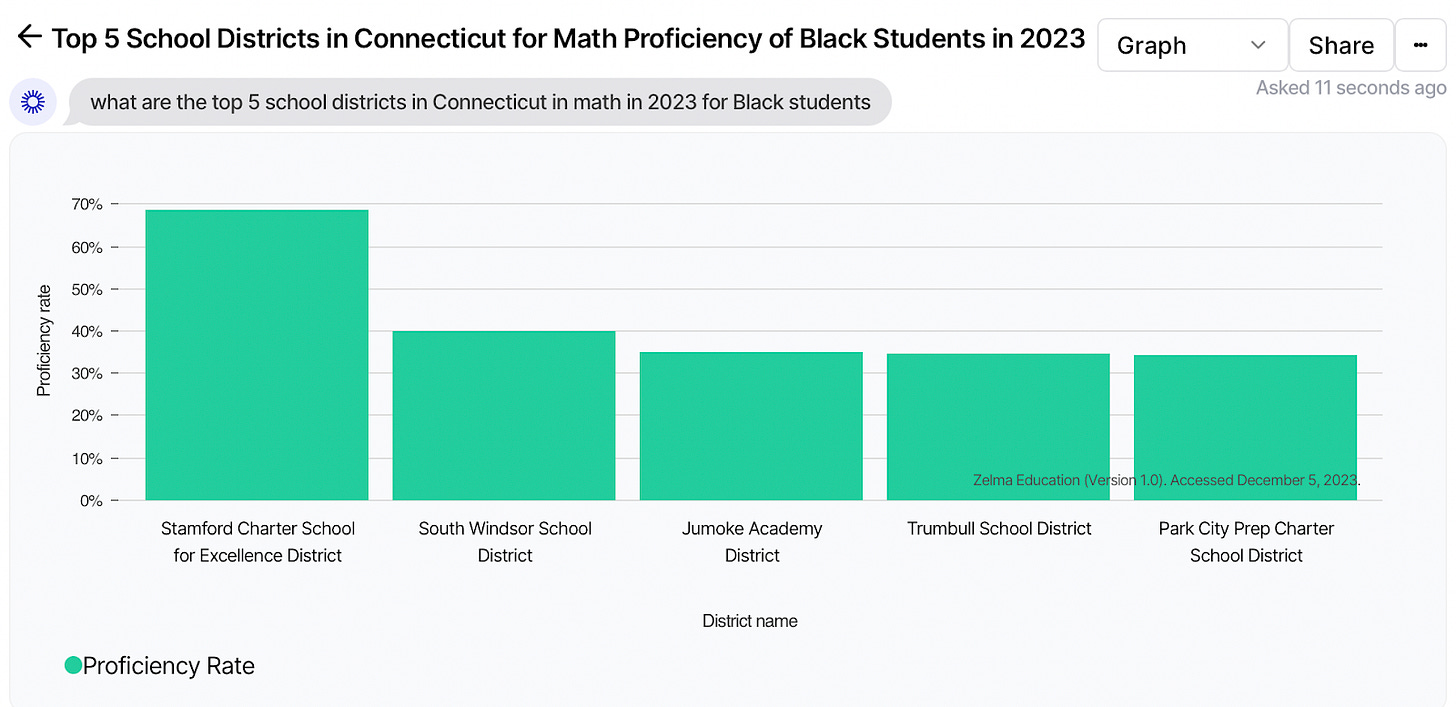
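For the curious, here is a rough sketch of the kind of query a question like that might turn into. This is an assumption-laden illustration, not Zelma's actual code or schema: the table name `district_scores`, its columns, and every number in it are made up, and it uses Python's built-in SQLite rather than whatever database sits behind Zelma.

```python
import sqlite3

# In-memory database with a hypothetical table layout (not Zelma's real schema).
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE district_scores (
        state TEXT, year INTEGER, subject TEXT,
        district TEXT, pct_proficient REAL
    )
    """
)

# Invented placeholder rows; none of these are real test results.
rows = [
    ("CT", 2023, "math", "District A", 80.0),
    ("CT", 2023, "math", "District B", 75.5),
    ("CT", 2023, "math", "District C", 70.0),
    ("CT", 2023, "math", "District D", 65.0),
    ("CT", 2023, "math", "District E", 60.0),
    ("CT", 2023, "math", "District F", 55.0),
    ("CT", 2022, "math", "District G", 99.0),  # wrong year, filtered out
    ("CT", 2023, "ela", "District H", 95.0),   # wrong subject, filtered out
]
conn.executemany("INSERT INTO district_scores VALUES (?, ?, ?, ?, ?)", rows)

# "Top 5 districts in Connecticut in math in 2023" as a parameterized query.
TOP_FIVE_SQL = """
    SELECT district, pct_proficient
    FROM district_scores
    WHERE state = ? AND year = ? AND subject = ?
    ORDER BY pct_proficient DESC
    LIMIT 5
"""
top_five = conn.execute(TOP_FIVE_SQL, ("CT", 2023, "math")).fetchall()
```

The point of the sketch is that the answer comes from filtering and sorting rows that already exist in the database, and the chart is drawn from those rows.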

You can ask follow-up questions. For example, you can ask the above question for Black students only.

You can even refine this query to focus only on traditional public schools rather than charter schools.

Zelma can make graphs that display data over time, or it can look at schools within a district. It knows what an “achievement gap” is, so you can ask for achievement gap estimates within a state or district. I hope it will have answers to your questions, so please try! The more you ask, the better Zelma will get at knowing what you want.
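As a worked example, one common way to express an achievement gap is the difference in proficiency rates between two student groups, in percentage points. The sketch below assumes that definition; how Zelma itself computes a gap is not described here, and the group labels and rates are invented for illustration.

```python
def proficiency_gap(rates, group_a, group_b):
    """Return group_a's rate minus group_b's rate in percentage points.

    Returns None when either group is missing, for example because a
    state does not report that subgroup.
    """
    a = rates.get(group_a)
    b = rates.get(group_b)
    if a is None or b is None:
        return None
    return round(a - b, 1)


# Invented proficiency rates (percent) for one hypothetical district and year.
rates = {
    "Not economically disadvantaged": 62.0,
    "Economically disadvantaged": 35.5,
}

gap = proficiency_gap(
    rates, "Not economically disadvantaged", "Economically disadvantaged"
)
missing = proficiency_gap(rates, "English learners", "Economically disadvantaged")
```

Here `gap` comes out to 26.5 percentage points, and `missing` is `None` because one of the groups has no reported rate.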

Limitations

A few notes on the limitations of this tool are in order.

The first limitation is also a strength, in our view. Zelma can write SQL code to query only the test score database. What that means is, it can’t make up test scores or proficiency rates, and it can’t incorporate other data, such as graduation rates or community employment rates. It’s got an incredible amount of assessment data, and it can show you. That’s it!

A second limitation is with the data. Our team has done everything we can to get as much data as possible, but not every state reports all of its data. For privacy reasons, all states suppress numbers for some small groups, and the degree of this varies. Some states do not report data by race/ethnicity and others do not report data by grade level. One state told us that it couldn’t give us the counts of students who took its test (even the total for the state) due to privacy concerns. We have done our best to include all available data in our data files, but some of these limitations mean that the queries may not be able to produce a figure for all subjects for all years. We are continuing to fill important gaps in the data. If your state is missing data, we welcome help in pushing them to provide it!
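To make the suppression issue concrete, here is a small sketch of how suppressed cells might be read so that they show up as gaps rather than as zeros. The markers below are assumptions for illustration; each state uses its own conventions, and this is not the exact logic in our data files.

```python
# Hypothetical suppression markers; real state files vary widely.
SUPPRESSION_MARKERS = {"*", "**", "<10", "n/a", ""}


def parse_rate(raw):
    """Convert a reported proficiency value to a float, or None if suppressed.

    Anything that is a known marker, or that cannot be read as a number,
    is treated as missing rather than guessed at.
    """
    text = str(raw).strip()
    if text.lower() in SUPPRESSION_MARKERS:
        return None
    try:
        return float(text.rstrip("%"))
    except ValueError:
        return None


# Made-up values as they might appear across three years for a small subgroup.
reported = ["41.2%", "*", "38.5"]
parsed = [parse_rate(value) for value in reported]
```

Keeping suppressed values as `None` means a chart shows a gap for that year, which is more honest than filling in a number the state never published.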

A final note on the limitations of the data: states often need to change their assessments, or refrain from publishing results in a given year and subject when they are field testing a new test. In these cases, data are not available and you will see gaps in the visualizations. We have done our best to capture these changes, which affect comparability over time, below all figures; you’ll see them under “Notable Events.”

Going Forward and Thank Yous

We’ve got lots of ideas for how to make this tool even better, and we want yours too. Please share your feedback here — the good and the bad. Thanks!

This project is in partnership with Novy.ai, and has benefited from support from OpenAI. The data team is led by Clare Halloran, assisted by Megan Chen, Meghan Cornacchia, Maggie Jiang, Mira Mehta, Benjamin Moshes, Min Namgung, Jay Philbrick, Joshua Silverman and Will Tolmie.
